# Server Scaling in Node.js with the cluster and child_process Modules

Scalability is the property of a system to handle a growing amount of work by adding resources to the system. Scaling a server involves optimizing it so that it can handle more requests without crashing or sacrificing latency.

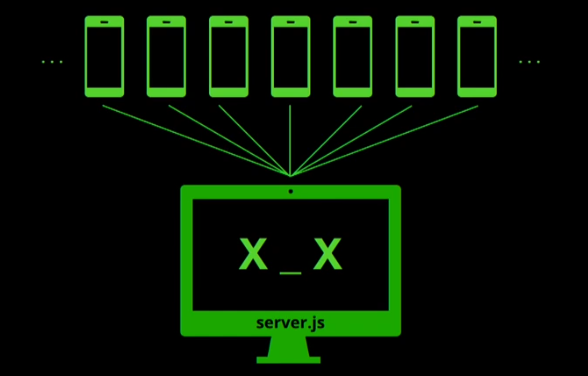

Node.js runs JavaScript on a single thread with non-blocking I/O, which works well for a single process. But one process on one CPU core is not going to be enough to handle the increasing workload of your application. No matter how powerful your server is, a single thread can only support a limited load.

The fact that Node runs in a single thread does not mean we can’t take advantage of multiple processes and, of course, many machines as well.

Using multiple processes is the best way to scale a Node application. Node is designed for building distributed applications with many nodes, hence its name. Scalability is baked into the platform; it is not something to put off until later in the lifetime of an application.

By default, Node.js serves HTTP requests from a single-threaded process, which means requests are handled concurrently but never in parallel: at any given moment, only one piece of JavaScript is executing. This default behavior is fine, but when the workload increases (many users interacting with the server at the same time), the server may begin to experience bottlenecks such as:

- Unable to handle an increasing number of requests

When many requests hit the server at the same time, they have to queue while the server executes them one at a time, which usually results in slow responses.

- Hitting computation-heavy endpoints

If a request hits a computationally intensive endpoint, the HTTP server is stuck on that computation until it finishes. All other requests that arrive in the meantime wait in the queue, and if the computation takes too long, they may time out.
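The second bottleneck can be observed without any server at all. The sketch below is an illustration only: `blockFor` and `timerDelayWhileBlocked` are hypothetical helper names, and the busy-wait loop stands in for a heavy computation. It schedules a timer for 0 ms and then blocks the thread, so the timer cannot fire until the loop ends.

```javascript
// Busy-wait for `ms` milliseconds to mimic a CPU-bound task (hypothetical helper).
function blockFor(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // Spin: nothing else can run on this thread in the meantime.
  }
}

// Schedule a 0 ms timer, block the thread, and report how late the timer fired.
function timerDelayWhileBlocked(blockMs) {
  return new Promise((resolve) => {
    const scheduledAt = Date.now();
    setTimeout(() => resolve(Date.now() - scheduledAt), 0);
    blockFor(blockMs); // The timer callback has to wait for this to finish.
  });
}

timerDelayWhileBlocked(200).then((delay) => {
  console.log(`A 0 ms timer fired after about ${delay} ms`);
});
```

A request handler that arrives during the busy loop is delayed in the same way as the timer callback here.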

By default, JavaScript executes code concurrently but not in parallel, and Node.js is no exception. The code below shows the conventional way of setting up an Express server in Node, which handles requests concurrently but not in parallel.

```javascript
const express = require("express");
const dotenv = require("dotenv");

// Load variables from .env before reading process.env
dotenv.config();

const app = express();
const PORT = process.env.PORT || 3000;

// Parse JSON request bodies
app.use(express.json());

app.get("/", (req, resp, next) => {
  // Handling some request here
});

app.listen(PORT, (err) => {
  if (err) throw err;
  console.log(`We are up at port ${PORT}`);
});
```

To tackle the bottlenecks highlighted above, we will take advantage of our multicore processor by using the cluster and child_process modules built into Node.js. How do we achieve this? Let's swing into action.

The problem of being unable to handle many simultaneous requests can be mitigated with the built-in cluster module. Let's restructure our server to look like this:

```javascript
const os = require("os");
const cluster = require("cluster");

// cluster.isMaster is deprecated since Node.js 16 in favor of cluster.isPrimary
if (cluster.isMaster) {
  // GET THE NUMBER OF CPUS AVAILABLE
  const num_of_cpu = os.cpus().length;
  console.log(`Server forking ${num_of_cpu} CPUS`);

  // Fork one worker per CPU core
  for (let i = 0; i < num_of_cpu; i++) {
    cluster.fork();
  }
} else {
  const express = require("express");
  const dotenv = require("dotenv");

  // CONFIGURE ENVIRONMENT VARIABLE HOLDER
  dotenv.config();

  const app = express();

  // BODY PARSER
  app.use(express.json());

  app.get("/", (req, resp, next) => {
    // Handling some request here
  });

  // LISTEN TO PORT
  const PORT = process.env.PORT || 3000;
  const pid = process.pid;

  app.listen(PORT, (err) => {
    if (err) throw err;
    console.log(`Process ${pid} is listening to port ${PORT}`);
  });
}
```

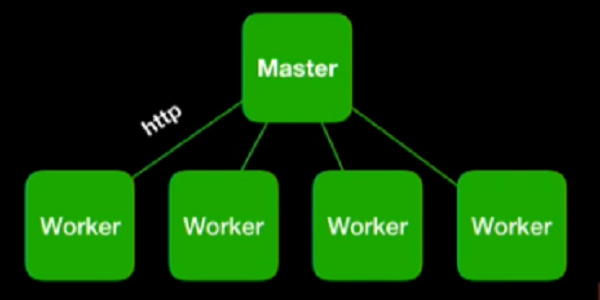

In the code above, we begin by requiring the os module, which exposes information about the operating system of the machine the server runs on, and then the cluster module that is built into Node.js.

When the server starts, we check the isMaster property of the cluster module (named isPrimary since Node.js 16, which deprecates isMaster) to determine whether the current process is the master process. If it is, we get the number of CPU cores on the machine by calling the cpus() method of the os module, then loop that many times and call the fork() method of the cluster module on each iteration to create a child process (worker). Each worker runs the same file again, but because it is not the master process, it takes the else branch and starts its own instance of our server. At this point, every worker can handle requests to the server independently.

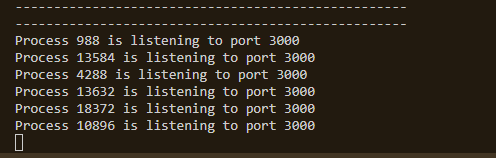

If you start your local server, the output on your console will look like the image below.

The number of child processes (workers) created here depends on my processor; yours may be more or less. You can create as many child processes as you want by changing the loop's upper bound.

```javascript
const child_process = 6;

// Use `<` rather than `<=` so that exactly six workers are forked
for (let i = 0; i < child_process; i++) {
  cluster.fork();
}
```

This creates six child processes. Creating more workers than there are CPU cores is not encouraged, though, so it's best to stick to the number of cores available.
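One way to make the worker count configurable while still respecting the number of cores is to clamp the requested value. This is a minimal sketch, not part of the server above: `resolveWorkerCount` is a hypothetical helper, and the `WORKER_COUNT` environment variable is an assumed name chosen for illustration.

```javascript
const os = require("os");

// Hypothetical helper: clamp a requested worker count to the range [1, cpuCount].
// Falls back to cpuCount when the request is missing or not a positive integer.
function resolveWorkerCount(requested, cpuCount = Math.max(1, os.cpus().length)) {
  const parsed = Number.parseInt(requested, 10);
  if (!Number.isInteger(parsed) || parsed < 1) return cpuCount;
  return Math.min(parsed, cpuCount);
}

// WORKER_COUNT is an assumed variable name, not something Node.js defines.
const workers = resolveWorkerCount(process.env.WORKER_COUNT);
console.log(`Would fork ${workers} worker(s)`);
```

The resulting number could then replace the hard-coded bound in the fork loop.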

What goes on under the hood?

By default (except on Windows), the master process uses a round-robin algorithm to distribute incoming connections among the workers, which are separate processes rather than threads. The first connection goes to the first worker, the next to the second, and so on, wrapping back to the first worker after the last; a request handed to a busy worker waits until that worker is free. This lets individual workers execute requests in parallel and reduces the waiting time of queued requests, thereby increasing the number of requests the server can handle.
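The round-robin idea can be sketched in a few lines. This is a simplified model for illustration only, not Node's actual scheduler; `assignRoundRobin` and the worker labels are hypothetical names.

```javascript
// Simplified model of round-robin distribution (not Node's real implementation):
// request i goes to worker i modulo the number of workers.
function assignRoundRobin(workerIds, requestIds) {
  const assignments = new Map(workerIds.map((id) => [id, []]));
  requestIds.forEach((requestId, index) => {
    const workerId = workerIds[index % workerIds.length];
    assignments.get(workerId).push(requestId);
  });
  return assignments;
}

const distribution = assignRoundRobin(
  ["worker-1", "worker-2", "worker-3"],
  [1, 2, 3, 4, 5, 6, 7]
);

// worker-1 receives requests 1, 4, 7; worker-2 receives 2, 5; worker-3 receives 3, 6
console.log(Object.fromEntries(distribution));
```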

The second bottleneck, hitting a computation-heavy endpoint, can be addressed with the child_process module in Node.js, which allows us to fork a new child process to handle a request or computation.

We will demonstrate this by creating a computationally intensive endpoint in our server and using the child_process module to handle the computation while the parent Node process keeps serving other requests. For comparison, we will also create an endpoint that performs the same computation without a child process.

Now create a new JavaScript file named bigComputation.js in your root directory and place the code below in it to mimic a huge computation.

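```javascript
// Wait for the parent's "start" message before running the computation
process.on("message", (message) => {
  if (message !== "start") return;

  let nums = 0;
  for (let i = 0; i < 1e4; i++) {
    console.log(i);
    nums += i;
  }

  // Send the result to the parent, then exit once it has been delivered
  process.send(nums, () => process.exit(0));
});
```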

Then create another js file in your root directory and place the following code in it.

```javascript
const express = require("express");
const dotenv = require("dotenv");
const { fork } = require("child_process");

// CONFIGURE ENVIRONMENT VARIABLE HOLDER (before reading process.env)
dotenv.config();

const app = express();
const PORT = process.env.PORT || 3000;

// BODY PARSER
app.use(express.json());

// Simple computation
app.get("/fast", (req, resp, next) => {
  let num = 0;
  for (let i = 0; i < 10; i++) {
    console.log(i);
    num += i;
  }
  resp.json(`The sum is: ${num}`);
});

// Huge computation on the parent process
app.get("/normal", (req, resp, next) => {
  let num = 0;
  for (let i = 0; i < 1e4; i++) {
    console.log(i);
    num += i;
  }
  resp.json(`The sum is: ${num}`);
});

// Huge computation with forked child process
app.get("/slow", (req, resp, next) => {
  // FORK A CHILD PROCESS TO HANDLE THE COMPUTATION
  const compute = fork("bigComputation.js");
  compute.send("start");

  // LISTEN FOR THE MESSAGE EVENT ON THE CHILD PROCESS
  compute.once("message", (result) => {
    resp.send(`Result at last: ${result}`);
  });
});

app.listen(PORT, (err) => {
  if (err) throw err;
  console.log(`We are listening at port ${PORT}`);
});
```

In the code above, we created a new server with three endpoints (normal, fast and slow). The normal endpoint performs a long computation without using a child process, the fast endpoint does a simple calculation, and the slow endpoint forks a new child process to handle the same huge computation handled by the normal endpoint.

If you run the server and call the normal endpoint (http://localhost:3000/normal) at the same time as the fast endpoint (http://localhost:3000/fast), you will notice that the huge computation keeps the server busy until it finishes, so the fast endpoint, which only needs to perform a simple calculation, waits in the queue until then.

On the other hand, if you call the slow endpoint (http://localhost:3000/slow) at the same time as the fast endpoint (http://localhost:3000/fast), you will notice that the server can still attend to the fast endpoint while the long computation is running. Unlike the normal endpoint, the slow endpoint forks a child process and passes it the path to our bigComputation.js file. The forked process handles the huge computation while the server carries on with its usual work, and when the child process finishes the computation, it sends a message with the result to the parent process, which forwards it to the client that requested it. With this approach, the HTTP server can handle a huge computation in parallel with other requests without keeping those requests in a long waiting queue, thereby increasing the speed and performance of the server.
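The fork-and-wait flow described above can also be wrapped in a Promise, which makes errors and early exits easier to handle. The following is a hedged sketch rather than the article's implementation: `runInChild` is a hypothetical helper, and the child script is written to a temporary file purely so that the example is self-contained.

```javascript
const { fork } = require("child_process");
const fs = require("fs");
const os = require("os");
const path = require("path");

// Hypothetical helper: fork `scriptPath`, send it "start", and settle on the
// first reply, error, or exit, whichever happens first.
function runInChild(scriptPath) {
  return new Promise((resolve, reject) => {
    const child = fork(scriptPath);
    child.once("message", (result) => {
      resolve(result);
      child.kill(); // The child is no longer needed once it has replied.
    });
    child.once("error", reject);
    // Rejecting after a successful resolve is a no-op, so this only matters
    // when the child exits before replying.
    child.once("exit", (code) => {
      reject(new Error(`Child exited with code ${code} before replying`));
    });
    child.send("start");
  });
}

// Stand-in for bigComputation.js, written to a temp file for this example only.
const childSource = `
process.on("message", (message) => {
  if (message !== "start") return;
  let sum = 0;
  for (let i = 0; i < 1e4; i++) sum += i;
  process.send(sum);
});
`;
const demoScriptPath = path.join(os.tmpdir(), `sum-child-${process.pid}.js`);
fs.writeFileSync(demoScriptPath, childSource);

runInChild(demoScriptPath)
  .then((sum) => console.log(`Child replied with ${sum}`)) // 49995000
  .catch((err) => console.error(err.message));
```

Inside a route handler, the same helper could be awaited and its rejection turned into an error response instead of leaving the request hanging.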

So far, we have been able to scale our Node.js server to handle multiple requests and huge computations without keeping other requests on hold.

I hope you were able to follow along up to this point and that this was helpful. For further reading, you may want to check out the cluster and child_process documentation.

Thanks for reading...